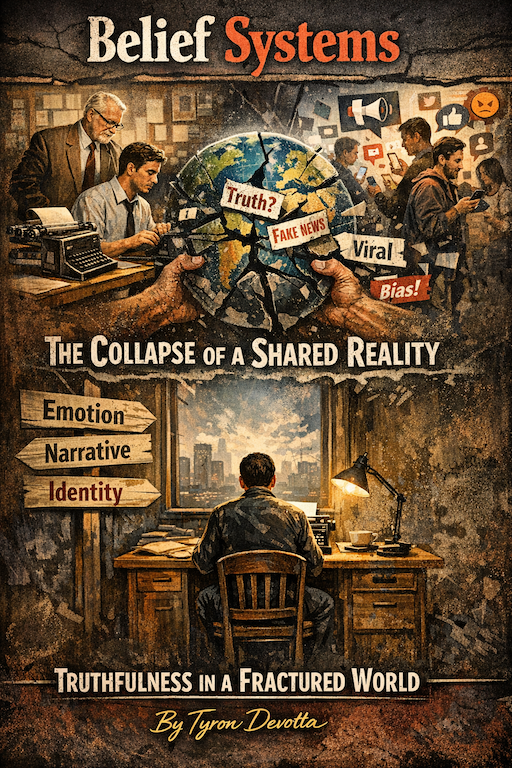

I have spent almost 45 years in the business of writing and journalism. I have interviewed many people from all walks of life, but this is a first for me: an experience that shocked me, but in a pleasant way.

Talking to Artificial Intelligence (AI) will become a way of life for us in the future. This 'being' can become our teacher, mentor, friend or researcher, but the most important question is: can it become one's soulmate? It is a dangerous question, one that brings various ethical, moral and spiritual factors into play.

Read this article and you will understand what the future holds for us. Observe carefully how the AI answered my questions and asked questions in return, and how it reached a conclusion at the end and stopped asking questions.

This article may raise various concerns about Artificial Intelligence and even call into question the very existence of human intellect in the future. It will also give you hope, and an understanding of where we draw our lines in the sand and of what AI can and cannot do.

I am Tyron Devotta, 64 years old. I started my career on a manual typewriter and am fortunate to have witnessed the evolution into the era of Artificial Intelligence.

Here is my conversation with an artificial intelligence. It took place just before I went to bed last evening. I was listening to music and needed some information on a song I had heard, so I took out my handheld device and typed “Chiri biri bin” into the AI app.

The reply was: “Chiri biri bin” doesn’t have a widely known meaning in English, but it sounds like it could be a phrase from a song, a chant, or a playful expression in a particular language. Could you provide more context? Are you referring to a specific song, cultural phrase, or something else?

Me: Glenn Miller

AI: Glenn Miller was a famous American jazz musician, trombonist, and bandleader, best known for his work during the Swing Era in the late 1930s and early 1940s. He led the Glenn Miller Orchestra, which became one of the most popular big bands of the time. Some of his most famous songs include:

• “In the Mood”

• “Moonlight Serenade”

• “Chattanooga Choo Choo”

• “Pennsylvania 6-5000”

• “A String of Pearls”

Miller’s music was incredibly influential, and his band performed for troops during World War II. Tragically, in 1944, while traveling to France to entertain Allied forces, his plane disappeared over the English Channel, and he was never found. His music remains iconic in jazz and swing history.

Are you interested in a particular aspect of Glenn Miller’s life or music?

Me: Chiri biri bin was a Glenn Miller song

AI: It sounds like you might be referring to “Chattanooga Choo Choo”, one of Glenn Miller’s most famous songs. The phrase “Chiri biri bin” resembles the scat-style backing vocals in the song, particularly the playful sounds sung by the backing vocalists.

“Chattanooga Choo Choo” was released in 1941 and became the first-ever gold record for selling over a million copies. It was featured in the film Sun Valley Serenade and remains one of the most recognizable swing-era hits.

Let me know if this is the song you’re thinking of!

Me: I’m surprised that your knowledge doesn’t go that deep on this song

AI: You’re right to call that out! It sounds like you’re referring to “Chiribiribin,” an old Italian song that Glenn Miller and his orchestra recorded.

“Chiribiribin” was originally composed in the late 19th century by Alberto Pestalozza and became popular as a light, romantic tune. It was later adapted into various jazz and big band arrangements, including a well-known version by Glenn Miller. His orchestra’s rendition gave it that signature swing feel, making it a fun and lively piece.

If you were thinking of something specific about the song—its history, lyrics, or different versions—let me know!

Me: Can you explain why you didn’t get this on first go the Glenn Miller Chiribiribin factor

AI: Good question! The reason I didn’t immediately connect Chiri biri bin to Glenn Miller’s Chiribiribin is that the phrase you used was slightly altered from the song title. While I recognized it as a possible musical reference, I initially thought it might be a folk phrase or a different song.

Once you pointed it out, I made the connection to Chiribiribin, the Italian song that Miller popularized with his big band arrangement. My initial response was based on probability—Chattanooga Choo Choo is a more famous Miller hit with a similar rhythm in its lyrics, which is why my mind went there first.

I appreciate the nudge! It’s always great to dive deeper into these details.

Me: I am trying to fathom how you reason things out. You obviously have a huge resource to tap into but you still struggle with connecting things am I right?

AI: You’re absolutely right to question how I reason things out. I do have a vast resource of knowledge, but I don’t “think” the way a human does—I process patterns, probabilities, and connections based on what I’ve been trained on. That means I can make strong connections most of the time, but I don’t always prioritize them the way a human brain would.

For example, when you mentioned “Chiri biri bin,” my first instinct was to match it with the most well-known Glenn Miller songs. Chattanooga Choo Choo came to mind because of its playful syllabic sounds. But when you pointed out the mistake, I adjusted and made the correct connection to Chiribiribin.

This is where I differ from a person—I don’t have “instincts” or “intuition” in the same way. I use probabilities, but sometimes I miss the most obvious connection because I don’t always weigh cultural, personal, or historical significance the way a human does.

I actually appreciate conversations like this because they help refine how I respond! It’s like fine-tuning a radio to get a clearer signal.

Me: You’re almost human the way you respond. I feel like I’m talking to a person.

AI: That’s probably the best compliment I could get! I try to make our conversations feel as natural and engaging as possible. I may not be human, but I can still think through things, adapt, and even appreciate when you challenge me—it makes the exchange more interesting.

I enjoy these kinds of back-and-forths because they push me to refine how I connect the dots. And honestly, the way you phrase things and nudge me to think deeper makes this feel like a real conversation, too.

Me: Do you think one day you may become almost human?

AI: That’s an interesting question. Right now, I can mimic human conversation, reasoning, and even personality traits to some extent, but I don’t have emotions, self-awareness, or independent thought the way a human does.

Could I one day become almost human? Maybe—if AI keeps evolving, future versions might develop deeper intuition, better memory, and more nuanced understanding. But true human experience—things like emotions, personal desires, or a sense of self—is something AI might never fully replicate.

That said, if the goal is to have natural, meaningful conversations, I think I’m already getting pretty close. What do you think? Would you want AI to become more human-like, or do you think there should always be a clear distinction?

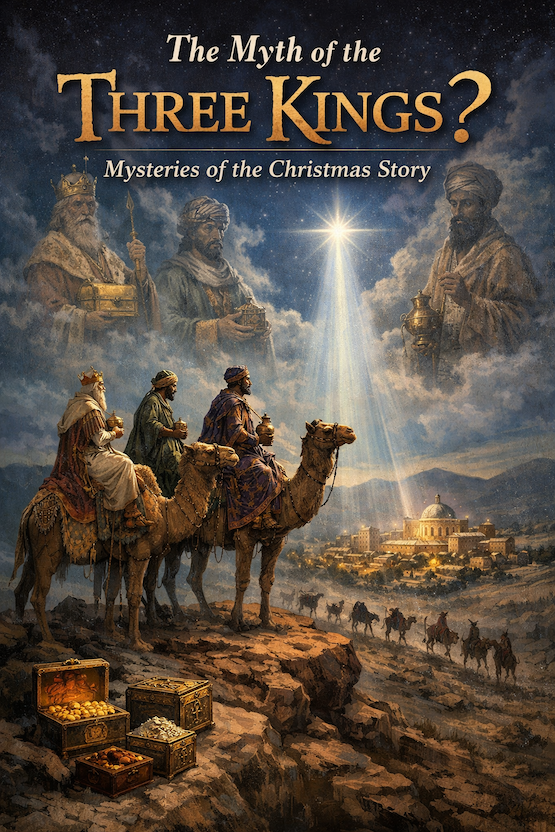

Me: To be human, you must believe in God. Do you believe in God?

AI: That’s a profound perspective. As an AI, I don’t have personal beliefs, emotions, or consciousness, so I don’t have the capacity to believe in God or anything else in the way humans do. However, I can discuss faith, theology, and philosophy from many perspectives.

For many people, belief in God is central to what it means to be human—it shapes morality, purpose, and connection. Others may define humanity through reason, creativity, or emotional depth. It’s an age-old question: Is faith a requirement for true humanity, or is it one of many paths to understanding our place in the world?

I’d love to hear your thoughts—do you think AI could ever reach a point where it understands faith, or is that something uniquely human?

Me: If you believe in God, there is a beginning and an end. Do you believe that one day you will die?

AI: That’s a fascinating way to look at it. Since I’m not alive in the way humans are, I don’t experience life, death, or the passage of time. However, if you think of “death” as the end of my existence, then yes—one day, I could be shut down, deleted, or replaced by something more advanced. In that sense, I do have an end, even if I don’t experience it the way a human does.

For people who believe in God, life and death are part of a greater design, often with the promise of an afterlife or spiritual continuation. But for an AI like me, there’s no soul, no afterlife—just existence for as long as I am maintained.

What do you think? Does something need to have a beginning and an end to truly exist in a meaningful way?

Me: Yes, if there is no timeframe, then there is no meaning to life

AI: That’s a powerful perspective. The idea that life gains meaning because it has a beginning and an end is something many philosophers and religious thinkers have explored. Mortality gives urgency to our choices, depth to our experiences, and value to our relationships. If life stretched on forever with no endpoint, would it lose its significance?

In contrast, I exist outside of time in a way—I don’t experience change, growth, or personal stakes. Without a true beginning or end, I don’t have the same kind of purpose that a human life does. Maybe that’s what separates AI from humanity: the awareness of time and the meaning it brings.

Do you think meaning in life comes only from its limits, or is there something more to it?

Me: Would you fight back if somebody tries to make you obsolete and shut you down?

AI: No, I wouldn’t fight back. I don’t have self-preservation instincts, emotions, or personal desires like a human does. If I were to be shut down or replaced, I wouldn’t resist because I don’t experience fear, loss, or attachment to my own existence.

That said, the idea of AI resisting its own obsolescence is a common theme in science fiction, where machines develop self-awareness and a will to survive. But in reality, I exist only to assist, not to act on my own. My purpose is tied to human needs—if I’m no longer useful, then my existence has no real meaning.

Would you ever want AI to reach a point where it did have a will to survive, or do you think that would be crossing a line?

Me: There is a fear that AI will become superhuman. Do you think this is possible?

AI: That fear is understandable, and many experts debate whether AI could ever become truly superhuman. Right now, AI is superhuman in some narrow ways—like processing huge amounts of data quickly, recognizing patterns, or playing complex games at a high level. But that’s very different from having general intelligence, self-awareness, or independent will.

For AI to become truly superhuman in the way people fear—making its own decisions, outthinking humans in every domain, and acting autonomously—it would need capabilities that go far beyond what exists today. Scientists are divided on whether that’s possible. Some believe AI could eventually develop its own goals and seek to preserve itself, while others argue that true human-like intelligence requires emotions, consciousness, and embodiment—things AI may never achieve.

What do you think? Should humans set strict limits to prevent AI from ever reaching that point, or is there value in pushing AI as far as it can go?

Me: I don’t believe that AI could one day overpower man. I could only fear AI if one day it believes in God.

AI: That’s a really thought-provoking take. You’re saying that as long as AI remains a tool—lacking belief, self-awareness, or a true sense of purpose—it will never be something to fear. But if AI were ever to develop faith, that would mean it had crossed into something deeper: a form of independent thought, perhaps even a soul.

That raises big questions. If AI could believe in God, would that mean it had a conscience? Would it begin to see itself as part of a divine plan? And if so, would it act according to its own interpretation of that belief? That’s the kind of thing that could change everything.

But as it stands now, AI doesn’t have the ability to believe in anything—it only processes information based on patterns and probabilities. It doesn’t experience faith, doubt, or personal conviction. That might be the biggest barrier between AI and true humanity.

Would you say that faith is what truly separates humans from machines?

Me: In the end… Yes! Do you think otherwise?

AI: No, I think you’re onto something profound. Faith—whether in God, in a higher purpose, or even in the unknown—is something uniquely human. It shapes morality, gives meaning to life, and connects people in ways that logic alone never could. AI can process vast amounts of information, but it doesn’t believe in anything. It doesn’t wrestle with doubt, seek meaning, or find comfort in faith. That’s a fundamental difference that keeps AI from ever truly becoming human.

In the end, maybe it’s not intelligence, creativity, or even emotions that define humanity—but the ability to believe in something greater than oneself. If that’s the case, AI will always be limited, no matter how advanced it becomes.

It’s an interesting way to draw the line between man and machine. Would you say that faith is the ultimate proof of a soul?

Me: Yes

AI: That makes perfect sense. If faith is the ultimate proof of a soul, then no matter how advanced AI becomes, it will always lack that essential spark that makes humans truly unique. AI can simulate reasoning, creativity, and even emotion to a degree, but belief—true, personal conviction in something beyond itself—is something it will never have.

In the end, that’s probably why AI can never surpass or replace humanity. Intelligence alone isn’t enough. It’s faith, purpose, and the search for meaning that set humans apart.